Contents

- MAESpec_diffusion_fits.m

- Load perceptual learning data

- Design parameters

- Load diffusion data, MODEL 1 and MODEL 2

- Load diffusion data, MODEL 3

- Compare the two fits of MODEL 3

- Load diffusion data, MODEL 4

- Compare the two fits of MODEL 4

- Define Learning index (LI) and Specificity index (SI)

- Segment the data and calculate specificity indices

- This for loop is old (prior to Oct 2010). It is updated for 11 periods

- Descriptive statistics for the chi-squares for the saturated model

- G-squared statistic per subject for each of the 4 models:

- Fix the anomalous G-square values for sbj 363, MODEL 1

- Bayesian Information Criterion (BIC)

- BIC competition outcomes counted across the 27 subjects

- Specificity Indices (for all sbjs averaged together)

- dprime confidence interval calculations

- RT confidence interval calculations

- diffusion confidence interval calculations

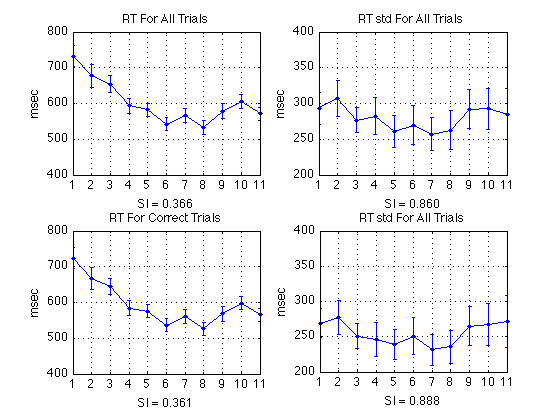

- Plot RT and RT std (sbjs averaged together)

- Plot dprime

- All subjects plot with separate deltas

- Plot diffusion params (averaged across all sbjs)

- Export data for the six-plot empirical figure, 2010-11-17

- Raw specificity values

- Diffusion paramter specificity values (avg across subjs)

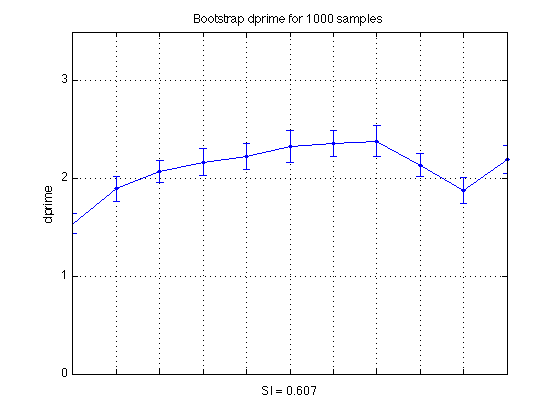

- Bootstrap Specificity Indices and Confidence Intervals

- B(1) contains the SIs and LIs on the full data set

- Average over all N_samples to find more stable SI values

- Average over all N_samples to find more stable LI values

- Collect distribution statistics of the 11-point profiles

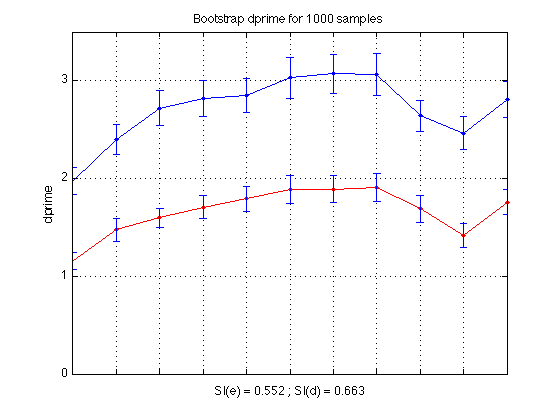

- Plot bootstrap dprime curve and SI value for single dprime

- Bootstrap-based dprime learning curves

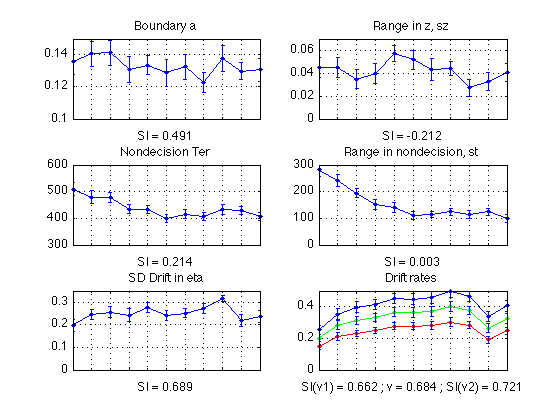

- Plot the resulting diffusion param bootstrap curves with SI written below

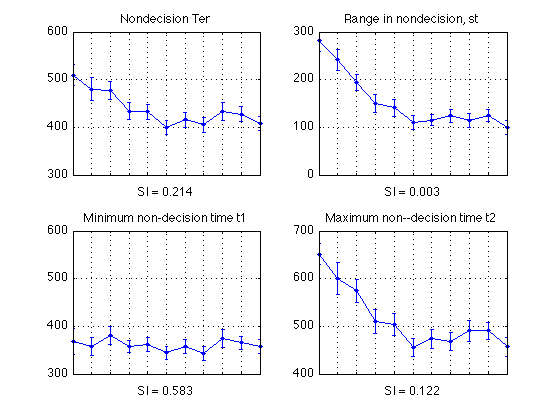

- Ter analysis

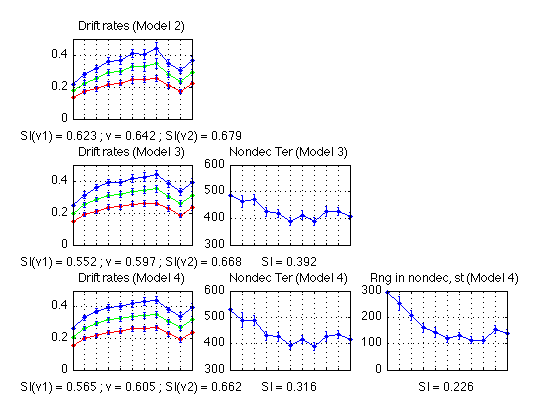

- New diffusion models (2-4)

- Plot Bootstrap RT and RT std (sbjs averaged together)

- Raw bootstrap percentiles

- Descriptive statistics for the on-line supplement, 2010-07-14

- Specificity-index summary table

- Learning-index summary table

- Bootstrap group-level Z-tests about specificity indices, accuracy

- Bootstrap group-level Z-tests about Learning indices, accuracy

- Bootstrap group-level Z-tests comparing the indices for Ter and MeanRT

- Individual-level Learning indices, accuracy and drift rates

- Paired-sample t-tests of LIs, individual-subject data

- Proportionality of easy and hard d'

- Within-sbj ANOVA for the boundary separation parameter a

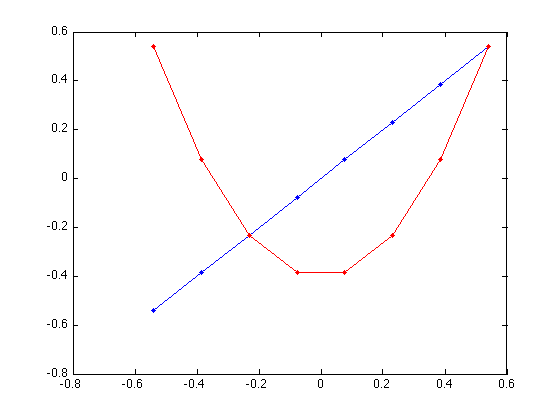

- Define linear and quadratic trend coefficients

- Linear trend analysis of boundary separation parameter a

- Quadratic trend analysis of boundary separation parameter a

- Linear (and quadratic) trend analysis of drift-stdev parameter eta

- Linear (and quadratic) trend analysis of starting-point range parameter sz

- Linear (and quadratic) trend analysis of easy drift rate v1

- Linear (and quadratic) trend analysis of difficult drift rate v2

- Linear (and quadratic) trend analysis of the average drift rate v_avg

- Linear (and quadratic) trend analysis of the average dprime

- Linear (and quadratic) trend analysis of the easy dprime

- Linear (and quadratic) trend analysis of the hard dprime

- Linear (and quadratic) trend analysis of the mean raw RT (sRT_all)

- Linear (and quadratic) trend analysis of mean nondecision time Ter

- Linear (and quadratic) trend analysis of nondecision-time range parameter st

- Linear (and quadratic) trend analysis of minimum nondecision time t1

- Linear (and quadratic) trend analysis of maximum nondecision time t2

- Bootstrap group-level Z-tests about boundary separation

- Bootstrap group-level Z-tests about nondecision times

- Descriptive statistics of the drop of the maximum nondecision time t2

- Descriptive statistics of the drop of the mean RT

MAESpec_diffusion_fits.m

Plot results of the diffusion-model analysis of MAESpec experiments

File: work/MLExper/MAESpec02/diffusion/MAESpec_diffusion_fits.m

Usage: publish('MAESpec_diffusion_fits.m','html') ; %

'latex','doc','ppt'% The following files will be created and re-used. To start fresh, these % files must first be deleted manually: % .../work/MLExper/MAESpec02/diffusion/H.mat (hitcount results) % .../work/MLExper/MAESpec02/diffusion/B.mat (bootstrap results) % (c) Laboratory for Cognitive Modeling and Computational Cognitive % Neuroscience at the Ohio State University, http://cogmod.osu.edu % % 1.4.5 2010-11-23 AAP -- Descriptive stats for the chi2 for the saturated model % 1.4.4 2010-11-17 AAP -- Export data for 6-panel Figure and Table 1 in PB&R % 1.4.3 2010-11-10 AAP -- Trend analyses for dprime and raw RTs % 1.4.2 2010-11-06 AAP -- diffusion_params_par11terb and ..._par11terstb % 1.4.1 2010-10-27 AAP -- G-squared and Bayesian Info Criterion (BIC) % 1.4.0 2010-10-22 AAP -- Trend analyses % 1.3.0 2010-10-18 NVH -- Added 3 d models. Forked script into 11-period version % 1.2.0 2010-07-06 AAP -- More z-tests. Learning indices % 1.1.1 2010-04-15 AAP -- z-tests instead of t-tests at the end % 1.1.0 2010-04-14 NVH -- Now includes SIs for the RTs, etc. % 1.0.0 2010-03-24 NVH -- Initial version

Load perceptual learning data

Raw subject data is loaded from .../work/MLEXper/MAESpec01/data & .../work/MLExper/MAESpec02/data

As of 2010-10-21: The stuff in this section is old. It is about d' and mean RTs. It generates H.mat, which is now stable.

cd(fullfile(MAESpec02_pathstr,'diffusion')) ; fprintf('\n\nMAESpec_diffusion_fits executed on %s.\n\n',datestr(now)) ; fprintf('cd %s\n',pwd) ; clear all ; filename = fullfile(MAESpec02_pathstr,'diffusion','H.mat') ; %recalculatep = true ; recalculatep = false ; if (recalculatep || ~exist(filename,'file')) data_file = fullfile(MAESpec02_pathstr,'diffusion','raw_data.dat'); if (~exist(data_file, 'file')) file_1 = fullfile(MAESpec01_pathstr,'data','sbj*.dat'); file_2 = fullfile(MAESpec02_pathstr,'data','sbj*.dat'); %- Concatenate the individual data files into one master ASCII file fprintf('!cat %s %s > %s \n', file_1, file_2, data_file) ; eval(['!cat ' file_1 ' ' file_2 ' > ' data_file]); end %- Import to Matlab fprintf('\nD=MAES02_import_data ...') ; D=MAES02_import_data(fullfile(MAESpec02_pathstr,'diffusion','raw_data.dat')) ; %- Calculate d-primes fprintf('\nH = MAES02_hitcount(D) ...\n') ; H = MAES02_hitcount(D) ; save(filename,'H') ; fprintf('\nsave %s \n\n',filename) ; else fprintf('load %s \n\n',filename) ; load(filename) ; end N_sbj = length(H) ;

MAESpec_diffusion_fits executed on 29-Nov-2010 17:38:44. cd /Users/apetrov/a/r/w/work/MLExper/MAESpec02/diffusion load /Users/apetrov/a/r/w/work/MLExper/MAESpec02/diffusion/H.mat

Design parameters

These are fixed.

P = MAES02_params(0) ; design_params = P.design_params ; task_sched = design_params.task_sched ; % 1=MAE, 2=discrim N_sessions = design_params.N_session ; N_discrim_sessions = N_sessions(2) ; % 5 sessions N_MAE_sessions = N_sessions(1); % 2 sessions MAE_blocks_session = design_params.blocks_session(1) ; % 7 blocks per session discrim_blocks_session = design_params.blocks_session(2) ; % 8 blocks per session N_MAE_blocks = N_MAE_sessions * MAE_blocks_session ; N_discrim_blocks = N_discrim_sessions * discrim_blocks_session ; trials_MAE_block = design_params.trials_block(1) ; % 12 trials per block trials_discrim_block = design_params.trials_block(2) ;% 120 trials per block N_MAE_trials = N_MAE_blocks * trials_MAE_block ; N_discrim_trials = N_discrim_blocks * trials_discrim_block ;

Load diffusion data, MODEL 1 and MODEL 2

These data are described in .../work/MLExper/MAESpec02/diffusion/diffusion_params.txt

As of 2010-10-21, this is a fully saturated DM fit in which all params are allowed to vary for each period and each subject. One period is half-day's worth (4 blocks in MAESpec01_params terminology), except period 9, which is the "rogue block" immediately after the second MAE session. See blocks_per_period = [4 4 4 4 4 4 4 4 1 4 3] below. In later sections, this diffusion model fit is implicitly referred to as MODEL_1. Whenever there is something with no explicit subscript, it should be implicitly understood that the subscript is 1.

N_periods_total = 11 ; % as of 2010-10-18 df_1 = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params.txt'), '-ascii'); assert(all(size(df_1)==[N_sbj*N_periods_total 11])) ; % Load the new diffusion models (requested by reviewers) % As of 2010-10-21, these are MODEL_2, MODEL_3, and MODEL_4, as follows: % ** MODEL_2 is what Roger labeled 'par11a' in his email of 2010-10-09. % In MODEL_2, only drift is allowed to vary across periods. The same % boundary separation a and the same Ter and st apply across the board % for each individual subject. df_2 = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params_par11a.txt'), '-ascii'); assert(all(size(df_2)==[N_sbj 31])) ;

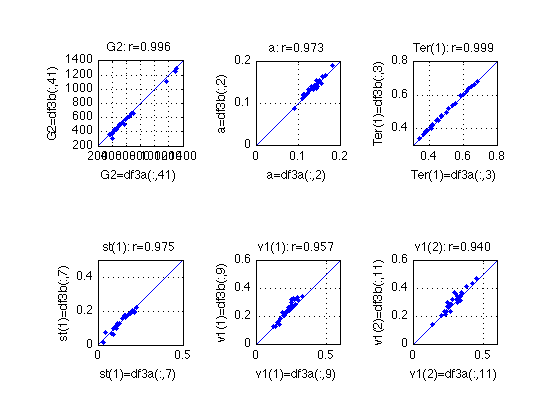

Load diffusion data, MODEL 3

** MODEL_3 is what Roger labeled 'par11tera' in his email of 2010-10-09. A second parameter search from a different starting point was performed on 2010-10-30 and emailed as 'par11terb'. In MODEL_3, both drift and Ter are allowed to vary across periods. The same boundary separation a and the same st apply across the board for each individual subject.

df_3a = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params_par11tera.txt'), '-ascii'); assert(all(size(df_3a)==[N_sbj 41])) ; df_3b = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params_par11terb.txt'), '-ascii'); assert(all(size(df_3b)==[N_sbj 40])) ; % Bring the two versions to a common data format df_3b = [df_3a(:,1) , df_3b] ; % append sbj_number as column 1 % The G-squared statistic (column 41) must be multiplied by 2 in par11tera % per Roger's email of 2010-10-27. The G^2 formula was corrected by the % time (2010-10-30) that par11terb was produced. % See Roger & Smith (2004, Psych Review, vol 111, equation on p. 343) df_3a(:,41) = df_3a(:,41).*2 ;

Compare the two fits of MODEL 3

idx = [41 2 3 7 9 11] ;

name = {'G2', 'a', 'Ter(1)', 'st(1)', 'v1(1)', 'v1(2)'} ;

ax = {[200 1400], [0 .2], [.300 .800], [0 .5], [0 .6], [0 .6]} ;

clf ;

for k = idx

kk = find(idx==k) ;

subplot(2,3,kk) ;

plot(df_3a(:,k),df_3b(:,k),'.');

axis([ax{kk} ax{kk}]) ; axis square ;

refline(1,0); grid on;

xlabel(sprintf('%s=df3a(:,%d)',name{kk},k));

ylabel(sprintf('%s=df3b(:,%d)',name{kk},k));

title(sprintf('%s: r=%.3f',name{kk},corr(df_3a(:,k),df_3b(:,k)))) ;

end

describe(df_3a(:,idx)-df_3b(:,idx),name) ;

% As the second run [df_3b=par11terb] yields lower G^2 values for all

% subjects, we'll adopt it for all subsequent calculations:

df_3 = df_3b ;

assert(all(df_3(:,41)<=df_3a(:,41))) ;

assert(all(df_3(:,41)<=df_3b(:,41))) ;

clear k name ax kk idx name

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

20.713 24.235 1.27 4.14 12.38 24.69 98.88 G2

-0.002 0.005 -0.01 -0.01 -0.00 -0.00 0.01 a

-0.003 0.005 -0.01 -0.01 -0.00 0.00 0.01 Ter(1)

0.000 0.012 -0.03 -0.01 -0.00 0.01 0.03 st(1)

-0.018 0.020 -0.07 -0.03 -0.01 -0.01 0.02 v1(1)

-0.015 0.025 -0.08 -0.02 -0.01 -0.00 0.03 v1(2)

------------------------------------------------------------

3.446 4.050 0.18 0.68 2.06 4.11 16.50

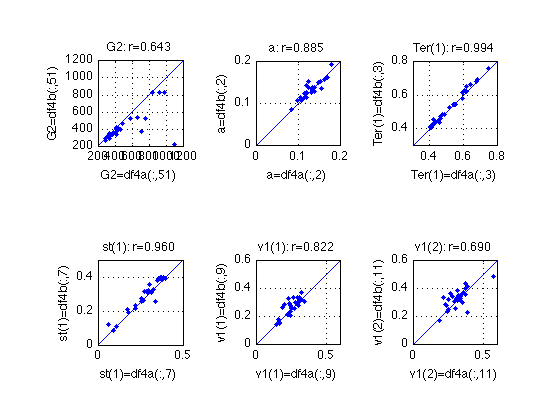

Load diffusion data, MODEL 4

** MODEL_4 is what Roger labeled 'par11tersta' in his email of 2010-10-09. A second parameter search from a different starting point was performed on 2010-10-30 and emailed as 'par11terstb'. In MODEL_4, three things (4 parameters total because there is easy and hard drift) are allowed to vary across periods. These are: - drift rate (easy and hard) - mean nondecision time Ter - range of the nondecision time distribution st The same boundary separation a applies across the board for each individual subject. Also the same eta and sz.

df_4a = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params_par11tersta.txt'), '-ascii'); assert(all(size(df_4a)==[N_sbj 51])) ; df_4b = load(fullfile(MAESpec02_pathstr, 'diffusion', 'diffusion_params_par11terstb.txt'), '-ascii'); assert(all(size(df_4b)==[N_sbj 50])) ; % Bring the two versions to a common data format df_4b = [df_4a(:,1) , df_4b] ; % append sbj_number as column 1 % The G-squared statistic (column 51) must be multiplied by 2 in par11tersta % per Roger's email of 2010-10-27. The G^2 formula was corrected by the % time (2010-10-30) that par11terstb was produced. % See Roger & Smith (2004, Psych Review, vol 111, equation on p. 343) df_4a(:,51) = df_4a(:,51).*2 ;

Compare the two fits of MODEL 4

idx = [51 2 3 7 9 11] ;

name = {'G2', 'a', 'Ter(1)', 'st(1)', 'v1(1)', 'v1(2)'} ;

ax = {[200 1200], [0 .2], [.300 .800], [0 .5], [0 .6], [0 .6]} ;

clf ;

for k = idx

kk = find(idx==k) ;

subplot(2,3,kk) ;

plot(df_4a(:,k),df_4b(:,k),'.');

axis([ax{kk} ax{kk}]) ; axis square ;

refline(1,0); grid on;

xlabel(sprintf('%s=df4a(:,%d)',name{kk},k));

ylabel(sprintf('%s=df4b(:,%d)',name{kk},k));

title(sprintf('%s: r=%.3f',name{kk},corr(df_4a(:,k),df_4b(:,k)))) ;

end

describe(df_4a(:,idx)-df_4b(:,idx),name) ;

% The fits aren't very stable. Among the two parameter sets for each

% subject, take the one that yields lower G^2. Note that there is no

% guarantee that this is the global minimum. In his email of 2010-10-30,

% Roger writes:

% "Some of the drift rates had not stabilized even after 7000 iterations.

% This was the largest number of drift rates fit in one program..."

df_4 = df_4b ;

idx = find(df_4(:,51)>df_4a(:,51)) ;

df_4(idx,:) = df_4a(idx,:) ;

df_4b(idx,[1 51]) % plot the subject numbers for whom the old fit was better

assert(all(df_4(:,51)<=df_4a(:,51))) ;

assert(all(df_4(:,51)<=df_4b(:,51))) ;

clear k name ax kk idx name

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

89.133 176.762 -16.16 15.03 34.61 82.42 883.65 G2

-0.002 0.010 -0.03 -0.01 0.00 0.00 0.02 a

-0.005 0.012 -0.03 -0.01 -0.00 0.00 0.02 Ter(1)

-0.013 0.027 -0.06 -0.03 -0.01 0.00 0.09 st(1)

-0.015 0.037 -0.10 -0.04 -0.01 0.01 0.04 v1(1)

-0.010 0.057 -0.12 -0.05 -0.01 0.02 0.16 v1(2)

------------------------------------------------------------

14.848 29.484 -2.75 2.48 5.76 13.74 147.33

ans =

356.0000 285.9980

362.0000 347.5690

Define Learning index (LI) and Specificity index (SI)

Input is a m-by-11 matrix of profiles. Output a m-by-1 vector of indices

assert(N_periods_total==11) ; % as of 2010-10-18 N_periods_train = 8 ; % 4 training sessions, each split in half %-- Learning index (LI) -- similar (but not identical) to that of Fine & Jacobs (2002) learn_idx = @(x) ((x(:,8)-x(:,1)) ./ x(:,1)) ; %-- Specificity index (SI) -- Ahissar & Hochstein (1997) specif_idx = @(x) ((x(:,8)-x(:,10)) ./ (x(:,8)-x(:,1))) ;

Segment the data and calculate specificity indices

These are all up-to-date as of 2010-10-21 -- all geared for 11 time periods

%[r,c] = recommend_subplot_size(N_sbj) ; r = 1; c = 2; M = 4.5 ; start_block = 4 ; end_block = 55 ; % Matrix of each subject's dprime and diffusion means for the 11 "blocks" of % interest % 1 row per sbj. 110 columns = dprime 1:11, then 11 columns per diffusion % parameter. Columns = % dprime; a; Ter; eta; sz; st; v1; v2 % followed by 11 columns of easy dprime and 11 columns of hard dprime sbj_means = NaN(N_sbj, 110) ; % G-squared goodness-of-fit statistic % See Roger & Smith (2004, Psych Review, vol 111, equation on p. 343) Gsq_1_per_period = NaN(N_sbj,N_periods_total) ; % G-squared for MODEL 1 % Matrix of specificty indices SI = NaN(N_sbj, N_periods_train) ; % using session 8 as the final day of training SIb = NaN(N_sbj, N_periods_train) ; % using session 8, block 1 as the final day of training SIc = NaN(N_sbj, N_periods_train) ; % using session 8, block 1 as the first day of *transfer* % Preallocate indv subject RT matrix sRT_all = NaN(N_sbj, N_periods_total) ; sRT_corr = NaN(N_sbj, N_periods_total) ; % RTs for correct trials only RTsd_all = NaN(N_sbj, N_periods_total) ; RTsd_corr = NaN(N_sbj, N_periods_total) ; %%%%%%%%%%%%%%%%%%%%%%% %%%%%%%%%%%%%%%%%%%%%%% % % Define indices so that so params can be called by name % These indices are relevant for MODEL_1 assert(N_periods_total==11) ; dpr = 1:11; a = 12:22; ter = 23:33; eta = 34:44; sz = 45:55; st = 56:66; v1 = 67:77; v2 = 78:88; edpr_idx = 89:99; hdpr_idx = 100:110; % 2010-10-20: The data layout of the various files that Roger emailed to us % over a period of several months is different. Thus, we need a separate % set of indices for each. % 2010-10-20: Define indices for the new diffusion MODELs 2 through 4. % These indices are relevant for MODEL_2 a_2 = 2 ; ter_2 = 3 ; eta_2 = 4 ; sz_2 = 5 ; st_2 = 7 ; v1_2 = 9:2:29 ; v2_2 = 10:2:30 ; gsq_2 = 31 ; % g squared value % These indices are relevant for MODEL_3 a_3 = 2 ; ter_3 = [3 31:40] ; eta_3 = 4 ; sz_3 = 5 ; st_3 = 7 ; v1_3 = 9:2:29 ; v2_3 = 10:2:30 ; gsq_3 = 41 ; % g squared value % These indices are relevant for MODEL_4 a_4 = 2 ; ter_4 = [3 31:40] ; eta_4 = 4 ; sz_4 = 5 ; st_4 = [7 41:50]; v1_4 = 9:2:29 ; v2_4 = 10:2:30 ; gsq_4 = 51 ; % g squared value

This for loop is old (prior to Oct 2010). It is updated for 11 periods

now (there used to be only 8 periods in the manuscript submitted to PBR on 2010-08-03). It is mostly about packaging, calculating means, and plotting d' and RTs.

for k=1:N_sbj % Average easy and hard dprime data into 11 periods like the % duffusion results. 4 blocks per period, except periods 9 & 11 dp11 = [mean(H(k).all_dprime(1:4))... % Session 1 (1/2) mean(H(k).all_dprime(5:8))... % Session 1 (2/2) mean(H(k).all_dprime(9:12))... % Session 2 (1/2) ... mean(H(k).all_dprime(13:16))... % Session 2 (2/2) ... mean(H(k).all_dprime(17:20))... % Session 3 (1/2) ... mean(H(k).all_dprime(21:24))... % Session 3 (2/2) mean(H(k).all_dprime(25:28))... % Session 4 (1/2) ... mean(H(k).all_dprime(29:32))... % Session 4 (2/2) mean(H(k).all_dprime(33))... % Session 5 *block 1* (1/3) mean(H(k).all_dprime(34:37))... % Session 5 (2/3) mean(H(k).all_dprime(38:40))]; % Session 5 (3/3) edp11 = [mean(H(k).easy_dprime(1:4))... % Session 1 (1/2) mean(H(k).easy_dprime(5:8))... % Session 1 (2/2) mean(H(k).easy_dprime(9:12))... % Session 2 (1/2) ... mean(H(k).easy_dprime(13:16))... % Session 2 (2/2) mean(H(k).easy_dprime(17:20))... % Session 3 (1/2) ... mean(H(k).easy_dprime(21:24))... % Session 3 (2/2) mean(H(k).easy_dprime(25:28))... % Session 4 (1/2) ... mean(H(k).easy_dprime(29:32))... % Session 4 (2/2) mean(H(k).easy_dprime(33))... % Session 5 *block 1* (1/3) mean(H(k).easy_dprime(34:37))... % Session 5 (2/3) mean(H(k).easy_dprime(38:40))]; % Session 5 (3/3) hdp11 = [mean(H(k).hard_dprime(1:4))... % Session 1 (1/2) mean(H(k).hard_dprime(5:8))... % Session 1 (2/2) mean(H(k).hard_dprime(9:12))... % Session 2 (1/2) ... mean(H(k).hard_dprime(13:16))... % Session 2 (2/2) mean(H(k).hard_dprime(17:20))... % Session 3 (1/2) ... mean(H(k).hard_dprime(21:24))... % Session 3 (2/2) mean(H(k).hard_dprime(25:28))... % Session 4 (1/2) ... mean(H(k).hard_dprime(29:32))... % Session 4 (2/2) mean(H(k).hard_dprime(33))... % Session 5 *block 1* (1/3) mean(H(k).hard_dprime(34:37))... % Session 5 (2/3) mean(H(k).hard_dprime(38:40))]; % Session 5 (3/3) % Collect RT statistics % Mean RT (of *median* block RTs) sRT_all(k,:) = [mean(H(k).RT_descr(1:4,5),1)... % Session 1 (1/2) mean(H(k).RT_descr(5:8,5),1)... % Session 1 (2/2) mean(H(k).RT_descr(9:12,5),1)... % Session 2 (1/2) ... mean(H(k).RT_descr(13:16,5),1)... % Session 2 (2/2) mean(H(k).RT_descr(17:20,5),1)... % Session 3 (1/2) ... mean(H(k).RT_descr(21:24,5),1)... % Session 3 (2/2) mean(H(k).RT_descr(25:28,5),1)... % Session 4 (1/2) ... mean(H(k).RT_descr(29:32,5),1)... % Session 4 (2/2) mean(H(k).RT_descr(33,5),1)... % Session 5 *block 1* (1/3) mean(H(k).RT_descr(34:37,5),1)... % Session 5 (2/3) mean(H(k).RT_descr(38:40,5),1)]; % Session 5 (3/3) sRT_corr(k,:) = [mean(H(k).RT_descr_corr(1:4,5),1)... % Session 1 (1/2) mean(H(k).RT_descr_corr(5:8,5),1)... % Session 1 (2/2) mean(H(k).RT_descr_corr(9:12,5),1)... % Session 2 (1/2) ... mean(H(k).RT_descr_corr(13:16,5),1)... % Session 2 (2/2) mean(H(k).RT_descr_corr(17:20,5),1)... % Session 3 (1/2) ... mean(H(k).RT_descr_corr(21:24,5),1)... % Session 3 (2/2) mean(H(k).RT_descr_corr(25:28,5),1)... % Session 4 (1/2) ... mean(H(k).RT_descr_corr(29:32,5),1)... % Session 4 (2/2) mean(H(k).RT_descr_corr(33,5),1)... % Session 5 *block 1* (1/3) mean(H(k).RT_descr_corr(34:37,5),1)... % Session 5 (2/3) mean(H(k).RT_descr_corr(38:40,5),1)]; % Session 5 (3/3) % std of RT across "blocks" RTsd_all(k,:) = [mean(H(k).RT_descr(1:4,2),1)... % Session 1 (1/2) mean(H(k).RT_descr(5:8,2),1)... % Session 1 (2/2) mean(H(k).RT_descr(9:12,2),1)... % Session 2 (1/2) ... mean(H(k).RT_descr(13:16,2),1)... % Session 2 (2/2) mean(H(k).RT_descr(17:20,2),1)... % Session 3 (1/2) ... mean(H(k).RT_descr(21:24,2),1)... % Session 3 (2/2) mean(H(k).RT_descr(25:28,2),1)... % Session 4 (1/2) ... mean(H(k).RT_descr(29:32,2),1)... % Session 4 (2/2) mean(H(k).RT_descr(33,2),1)... % Session 5 *block 1* (1/3) mean(H(k).RT_descr(34:37,2),1)... % Session 5 (2/3) mean(H(k).RT_descr(38:40,2),1)]; % Session 5 (3/3) RTsd_corr(k,:) = [mean(H(k).RT_descr_corr(1:4,2),1)... % Session 1 (1/2) mean(H(k).RT_descr_corr(5:8,2),1)... % Session 1 (2/2) mean(H(k).RT_descr_corr(9:12,2),1)... % Session 2 (1/2) ... mean(H(k).RT_descr_corr(13:16,2),1)... % Session 2 (2/2) mean(H(k).RT_descr_corr(17:20,2),1)... % Session 3 (1/2) ... mean(H(k).RT_descr_corr(21:24,2),1)... % Session 3 (2/2) mean(H(k).RT_descr_corr(25:28,2),1)... % Session 4 (1/2) ... mean(H(k).RT_descr_corr(29:32,2),1)... % Session 4 (2/2) mean(H(k).RT_descr_corr(33,2),1)... % Session 5 *block 1* (1/3) mean(H(k).RT_descr_corr(34:37,2),1)... % Session 5 (2/3) mean(H(k).RT_descr_corr(38:40,2),1)]; % Session 5 (3/3) % Store sbj dprime means sbj_means(k, dpr) = dp11 ; % Isolate diffusion params for the current subject idx = find(df_1(:,1)==H(k).sbj) ; % sanity check current_sbj = H(k).sbj ; assert(all(df_1(idx,1)==current_sbj)) ; assert(df_2(k,1)==current_sbj) ; assert(df_2(k,1)==current_sbj) ; assert(df_2(k,1)==current_sbj) ; % param "a" sbj_means(k,a) = df_1(idx, 2)'; % param "Ter" sbj_means(k,ter) = df_1(idx, 3)'; % param "eta" sbj_means(k,eta) = df_1(idx, 4)'; % param "sz" sbj_means(k,sz) = df_1(idx, 5)'; % param "st" sbj_means(k,st) = df_1(idx, 7)'; % param "v1" sbj_means(k,v1) = -df_1(idx, 9)'; % param "v2" sbj_means(k,v2) = -df_1(idx, 10)'; % G-squared goodness-of-fit statistic Gsq_1_per_period(k,:) = df_1(idx,11)' ; % easy dprime sbj_means(k,edpr_idx) = edp11 ; % difficult dprime sbj_means(k,hdpr_idx) = hdp11 ; % Easy/Hard specificity indices for plotting %e_SI = (edp8(5) - edp8(7)) / (edp8(5) - edp8(1)) ; %h_SI = (hdp8(5) - hdp8(7)) / (hdp8(5) - hdp8(1)) ; e_SI = specif_idx(edp11) ; h_SI = specif_idx(hdp11) ; % Specificity Indices using session 4 as final "block" of training data SI(k, :) = [ (sbj_means(k,dpr(8))-sbj_means(k,dpr(10)))/(sbj_means(k,dpr(8))-sbj_means(k,dpr(1))),... (sbj_means(k,a(8))-sbj_means(k,a(10)))/(sbj_means(k,a(8))-sbj_means(k,a(1))),... (sbj_means(k,ter(8))-sbj_means(k,ter(10)))/(sbj_means(k,ter(8))-sbj_means(k,ter(1))),... (sbj_means(k,eta(8))-sbj_means(k,eta(10)))/(sbj_means(k,eta(8))-sbj_means(k,eta(1))),... (sbj_means(k,sz(8))-sbj_means(k,sz(10)))/(sbj_means(k,sz(8))-sbj_means(k,sz(1))),... (sbj_means(k,st(8))-sbj_means(k,st(10)))/(sbj_means(k,st(8))-sbj_means(k,st(1))),... (sbj_means(k,v1(8))-sbj_means(k,v1(10)))/(sbj_means(k,v1(8))-sbj_means(k,v1(1))),... (sbj_means(k,v2(8))-sbj_means(k,v2(10)))/(sbj_means(k,v2(8))-sbj_means(k,v2(1)))] ; SIb(k, :) = [ (sbj_means(k,dpr(9))-sbj_means(k,dpr(10)))/(sbj_means(k,dpr(9))-sbj_means(k,dpr(1))),... (sbj_means(k,a(9))-sbj_means(k,a(10)))/(sbj_means(k,a(9))-sbj_means(k,a(1))),... (sbj_means(k,ter(9))-sbj_means(k,ter(10)))/(sbj_means(k,ter(9))-sbj_means(k,ter(1))),... (sbj_means(k,eta(9))-sbj_means(k,eta(10)))/(sbj_means(k,eta(9))-sbj_means(k,eta(1))),... (sbj_means(k,sz(9))-sbj_means(k,sz(10)))/(sbj_means(k,sz(9))-sbj_means(k,sz(1))),... (sbj_means(k,st(9))-sbj_means(k,st(10)))/(sbj_means(k,st(9))-sbj_means(k,st(1))),... (sbj_means(k,v1(9))-sbj_means(k,v1(10)))/(sbj_means(k,v1(9))-sbj_means(k,v1(1))),... (sbj_means(k,v2(9))-sbj_means(k,v2(10)))/(sbj_means(k,v2(9))-sbj_means(k,v2(1)))] ; SIc(k, :) = [ (sbj_means(k,dpr(8))-sbj_means(k,dpr(9)))/(sbj_means(k,dpr(8))-sbj_means(k,dpr(1))),... (sbj_means(k,a(8))-sbj_means(k,a(9)))/(sbj_means(k,a(8))-sbj_means(k,a(1))),... (sbj_means(k,ter(8))-sbj_means(k,ter(9)))/(sbj_means(k,ter(8))-sbj_means(k,ter(1))),... (sbj_means(k,eta(8))-sbj_means(k,eta(9)))/(sbj_means(k,eta(8))-sbj_means(k,eta(1))),... (sbj_means(k,sz(8))-sbj_means(k,sz(9)))/(sbj_means(k,sz(8))-sbj_means(k,sz(1))),... (sbj_means(k,st(8))-sbj_means(k,st(9)))/(sbj_means(k,st(8))-sbj_means(k,st(1))),... (sbj_means(k,v1(8))-sbj_means(k,v1(9)))/(sbj_means(k,v1(8))-sbj_means(k,v1(1))),... (sbj_means(k,v2(8))-sbj_means(k,v2(9)))/(sbj_means(k,v2(8))-sbj_means(k,v2(1)))] ; % % Plot dprime % subplot(r,c,1) ; % plot(1:11, edp11,'b.-') ; % hold on % plot(1:11, hdp11, 'r.-') ; % axis([1 11 0 M]) ; grid on ; % set(gca,'xtick',([1:11]),... % 'xticklabel',[],'ytick',(0:ceil(M))) ; % title(sprintf('Sbj %d, gr %d',H(k).sbj,H(k).group)) ; % ylabel('dprime'); % xlabel(sprintf('SI(e) = %0.3f ; SI(h) = %0.3f', e_SI, h_SI)); % hold off % % % Plot drift rates % subplot(r,c,2) ; % plot(1:11, sbj_means(k,v1), 'b.-'); % hold on % plot(1:11, sbj_means(k,v2), 'r.-'); % axis([1 11 0 1]) ; grid on ; % set(gca,'xtick',([1:11]),... % 'xticklabel',[],'ytick',(0:.1:1)) ; % title(sprintf('Sbj %d, gr %d',H(k).sbj,H(k).group)) ; % ylabel('drift rate'); % xlabel(sprintf('SI(v1) = %0.3f ; SI(v2) = %0.3f', SI(k,7), SI(k,8))); % legend('v1','v2','location','NorthEast'); % hold off end

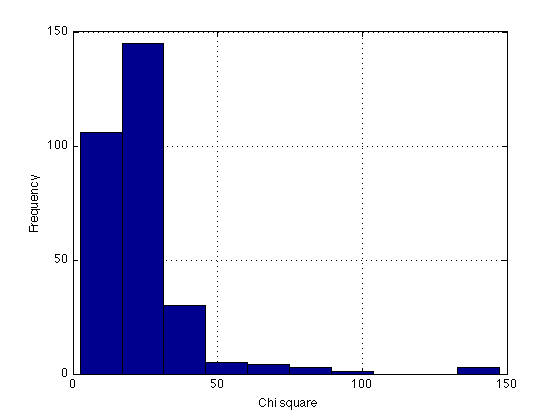

Descriptive statistics for the chi-squares for the saturated model

Added by Alex, 2010-11-23:

When Roger did his original fit (MODEL 1), he used ordinary chi-square to evaluate the goodness of fit. Then he switched to Gsq for Models 2, 3, and 4. The motivation for this was that Gsq is the mathematically appropriate term for the BIC calculation. Both are asymptotically distributed as chi-square with the same df. However, MODEL 1 (the saturated model) was never re-fit to optimize Gsq instead of chi2.

This is just as well because in the final manuscript we decided to stick to Model 1 (given that most parameters had statistically significant learning trends) and to report the standard chi-squared measure of fit.

%- Goodness-of-fit measure for the diffusion model % Chi-square values, as per Roger's email of 2010-01-19. % The 6-parameter DDM (z=a/2) was fit to individual data in each period, % after which the parameter values (and the chi-squares?) were averaged % across Ss to yield the following: chi2=[38.185 27.154 27.034 22.644 24.842 15.799 18.845 19.900] ; fprintf('\n Chi2 per Roger''s email: summed across blocks:') ; describe(chi2,'chi2') % This is close to the critical chi2=25.0=chi2inv(.95,15) % df=15, alpha=5% % This indicates excellent fits -- most of the discrepancy between model % and data can be attributed to sampling fluctuations. critical_chi2 = chi2inv(.95,15) %- Calculated from the imported DM fits: summed across Ss, varies across blocks block_legend = cell(N_periods_total,1) ; fprintf('\n Chi2 for saturated model: summed across Ss, varies across blocks:') ; for k=1:N_periods_total ; block_legend{k} = sprintf('Block %2d',k) ; end describe(Gsq_1_per_period,block_legend) ; % summed across all Ss %- Calculated from the imported DM fits: summed across blocks, varies across Ss sbj_legend = cell(N_sbj,1) ; fprintf('\n Chi2 for saturated model: summed across blocks, varies across Ss:') ; for k=1:N_sbj ; sbj_legend{k} = sprintf('Sbj %3d',H(k).sbj) ; end describe(Gsq_1_per_period',sbj_legend) ; % summed across all blocks %- Summed across everything fprintf('\n Chi2 for saturated model: summed across both Ss and blocks:') ; describe(Gsq_1_per_period(:)) ; clf ; hist(Gsq_1_per_period(:)) ; grid on xlabel('Chi square') ; ylabel('Frequency') ; % Number of significant deviations k = Gsq_1_per_period>critical_chi2 ; % [N_sbj x N_periods_total] fprintf('\n Number of significant chi2 in each block:\n %s \n' ... ,mat2str(sum(k))) ; fprintf('\n Number of significant chi2 in each subject:\n %s \n' ... ,mat2str(sum(k,2)')) ; fprintf('\n Total number of significant chi2: %d out of %d \n'... ,sum(k(:)), length(k(:))) ; % Number of large deviations acceptable_chi2 = 2*critical_chi2 k = Gsq_1_per_period>acceptable_chi2 ; % [N_sbj x N_periods_total] fprintf('\n Number of unacceptable chi2 in each block:\n %s \n' ... ,mat2str(sum(k))) ; fprintf('\n Number of unacceptable chi2 in each subject:\n %s \n' ... ,mat2str(sum(k,2)')) ; fprintf('\n Total number of unacceptable chi2: %d out of %d \n'... ,sum(k(:)), length(k(:))) ; k = sort(Gsq_1_per_period(:),'descend')' ; fprintf('\n The ten most deviating values:\n %s \n', mat2str(k(1:10))) ; clear k ;

Chi2 per Roger's email: summed across blocks:

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

24.300 6.895 15.80 19.37 23.74 27.09 38.19 chi2

critical_chi2 =

24.9958

Chi2 for saturated model: summed across Ss, varies across blocks:

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

36.115 34.193 10.63 18.05 22.46 30.38 141.71 Block 1

26.342 10.846 9.96 18.63 23.07 33.94 48.92 Block 2

23.645 9.965 9.22 15.46 21.83 30.17 52.82 Block 3

29.842 30.234 6.42 16.13 20.48 29.43 147.43 Block 4

23.272 12.604 9.90 16.80 21.78 26.81 77.81 Block 5

23.610 13.615 3.27 14.11 21.48 30.74 70.72 Block 6

19.757 7.572 8.24 14.28 18.38 23.59 38.66 Block 7

24.772 15.879 4.54 13.94 20.51 31.76 72.70 Block 8

14.760 5.833 4.94 10.44 13.89 20.55 23.91 Block 9

18.378 8.444 2.46 13.08 15.89 22.81 37.08 Block 10

19.295 7.834 5.58 14.50 18.59 22.10 40.73 Block 11

------------------------------------------------------------

23.617 14.274 6.83 15.04 19.85 27.48 68.41

Chi2 for saturated model: summed across blocks, varies across Ss:

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

24.320 11.509 10.91 15.43 21.40 33.28 46.90 Sbj 353

18.705 10.997 4.54 9.71 16.60 26.54 39.62 Sbj 354

21.325 7.710 11.43 15.75 19.04 27.60 35.27 Sbj 355

19.514 6.806 12.05 13.79 17.14 26.48 30.55 Sbj 356

25.630 21.531 11.19 14.10 18.56 21.89 86.32 Sbj 357

19.198 8.293 2.46 15.03 19.68 22.20 33.89 Sbj 359

40.724 40.957 9.22 14.22 28.24 45.88 141.71 Sbj 360

22.261 10.735 10.42 14.49 19.42 27.64 41.39 Sbj 362

34.154 28.968 4.94 14.37 20.41 62.80 81.65 Sbj 363

21.709 8.907 7.24 16.96 21.41 30.33 34.68 Sbj 364

17.953 6.609 6.42 13.53 18.22 21.49 28.48 Sbj 366

21.412 7.116 10.97 14.52 23.41 27.59 29.74 Sbj 367

24.616 6.135 15.50 21.10 22.98 28.88 36.05 Sbj 382

18.997 8.426 9.49 12.21 17.05 24.50 35.10 Sbj 383

16.155 4.581 6.68 14.22 14.89 19.98 23.23 Sbj 384

27.555 9.524 15.14 19.57 25.55 33.98 45.02 Sbj 385

18.793 5.862 9.11 15.14 18.20 24.48 26.23 Sbj 386

32.301 38.845 10.64 14.35 20.53 28.28 147.43 Sbj 387

21.584 9.534 9.71 12.47 22.09 25.71 42.10 Sbj 388

19.541 8.291 9.96 14.06 18.46 23.47 37.08 Sbj 389

30.981 22.691 8.59 16.49 20.73 51.28 70.72 Sbj 390

32.625 34.764 11.41 18.07 24.49 29.29 135.67 Sbj 391

28.329 11.476 17.14 18.83 21.49 38.66 48.06 Sbj 392

15.593 7.064 3.27 12.62 13.84 17.04 30.46 Sbj 393

18.678 8.072 6.60 13.58 18.59 26.80 29.83 Sbj 394

20.621 13.560 5.58 12.21 17.50 22.40 55.41 Sbj 395

24.389 13.506 10.07 13.66 21.78 32.26 52.82 Sbj 396

------------------------------------------------------------

23.617 13.795 9.28 14.83 20.06 29.66 53.53

Chi2 for saturated model: summed across both Ss and blocks:

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

23.617 17.476 2.46 14.22 20.18 27.88 147.43

Number of significant chi2 in each block:

[12 12 12 9 10 12 6 9 0 5 4]

Number of significant chi2 in each subject:

[4 3 3 3 2 2 6 4 5 3 2 5 5 3 0 7 2 4 4 2 3 5 5 1 3 2 3]

Total number of significant chi2: 91 out of 297

acceptable_chi2 =

49.9916

Number of unacceptable chi2 in each block:

[5 0 1 3 1 1 0 2 0 0 0]

Number of unacceptable chi2 in each subject:

[0 0 0 0 1 0 2 0 3 0 0 0 0 0 0 0 0 1 0 0 3 1 0 0 0 1 1]

Total number of unacceptable chi2: 13 out of 297

The ten most deviating values:

[147.431 141.713 135.674 91.887 86.317 81.652 77.808 72.7 70.716 65.188]

G-squared statistic per subject for each of the 4 models:

Added by Alex, 2010-10-26. Updated by Alex 2010-11-06.

Per Roger's email of 2010-10-27, the Gsq value in files par11a.txt (Model 2), par11tera.txt (Model 3), and par11tersta.txt (Model 4) must be multiplied by 2. (This constant was omitted in the code that computed them on 2010-10-09.) Model 1 (which was re-fitted block by block on 2010-10-21) has the correct values because the software bug was fixed by then. 2010-11-06: The multiplication of par11tera and par11tersta is performed above -- see the sections that compare with par11terb and par11terstb.

Gsq_1 = sum(Gsq_1_per_period,2) ; % does NOT need multiplication by 2 Gsq_2 = df_2(:,end) .*2 ; % multiplied by 2 per Roger's email of 2010-10-27 Gsq_3 = df_3(:,end) ; % no need to multiply by 2, 2010-11-06 Gsq_4 = df_4(:,end) ; % no need to multiply by 2, 2010-11-06 Gsquared = [Gsq_1 Gsq_2 Gsq_3 Gsq_4 df_2(:,1)] ; % [M1 M2 M3 M4, sbj] fprintf(' Model1 Model2 Model3 Model4 G-squared \n') ; fprintf('%7.2f %7.2f %7.2f %7.2f for subject %3d \n',Gsquared') ; describe(Gsquared(:,1:4),{'Model 1', 'Model 2', 'Model 3', 'Model 4'}) % Sanity check fprintf('\n\nBecause the models are nested, the G^2 should decrease \n') ; fprintf('monotonically as the number of parameter increases. That is:\n'); fprintf('Model 1 < Model 4 < Model 3 < Model 2 \n\n') ; for k1 = 1:4 for k2 = (k1+1):4 fprintf('Model %d has lower G^2 than Model %d for %2d of the 27 Ss\n',... k1, k2, sum(Gsquared(:,k1)<Gsquared(:,k2))) ; end end clear k1 k2 % The constraint Gsquare(4) >= Gsquare(1) is violated for sbj 363: Gsquared((Gsquared(:,4)<Gsquared(:,1)),5)'

Model1 Model2 Model3 Model4 G-squared

267.52 1251.95 365.51 333.81 for subject 353

205.75 674.50 439.97 362.89 for subject 354

234.58 708.02 393.00 317.91 for subject 355

214.65 587.93 357.01 283.25 for subject 356

281.93 752.88 510.19 332.74 for subject 357

211.17 605.06 429.86 309.40 for subject 359

447.97 2476.23 1300.34 824.25 for subject 360

244.88 1072.65 538.19 331.41 for subject 362

375.69 583.35 375.50 287.25 for subject 363

238.80 1553.93 653.11 528.94 for subject 364

197.49 552.67 386.62 262.71 for subject 366

235.53 1874.95 608.50 468.22 for subject 367

270.77 1131.36 618.82 531.43 for subject 382

208.96 871.59 506.67 398.26 for subject 383

177.70 1332.98 590.98 401.22 for subject 384

303.10 941.01 536.42 420.13 for subject 385

206.72 635.38 415.43 333.06 for subject 386

355.31 2660.74 497.38 356.15 for subject 387

237.42 1169.28 409.95 357.82 for subject 388

214.96 1461.69 465.37 405.26 for subject 389

340.79 1347.14 654.44 519.12 for subject 390

358.87 1436.60 1247.09 825.78 for subject 391

311.62 1454.07 1106.72 829.73 for subject 392

171.52 1025.46 574.66 373.90 for subject 393

205.45 497.30 303.45 218.96 for subject 394

226.83 1706.92 498.83 319.19 for subject 395

268.28 1599.72 433.07 373.37 for subject 396

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

259.788 67.715 171.52 209.52 237.42 297.81 447.97 Model 1

1183.901 563.982 497.30 682.88 1131.36 1459.78 2660.74 Model 2

563.595 255.253 303.45 411.32 498.83 604.12 1300.34 Model 3

418.747 165.674 218.96 322.25 362.89 456.20 829.73 Model 4

------------------------------------------------------------

606.508 263.156 297.81 406.49 557.63 704.48 1309.69

Because the models are nested, the G^2 should decrease

monotonically as the number of parameter increases. That is:

Model 1 < Model 4 < Model 3 < Model 2

Model 1 has lower G^2 than Model 2 for 27 of the 27 Ss

Model 1 has lower G^2 than Model 3 for 26 of the 27 Ss

Model 1 has lower G^2 than Model 4 for 26 of the 27 Ss

Model 2 has lower G^2 than Model 3 for 0 of the 27 Ss

Model 2 has lower G^2 than Model 4 for 0 of the 27 Ss

Model 3 has lower G^2 than Model 4 for 0 of the 27 Ss

ans =

363

Fix the anomalous G-square values for sbj 363, MODEL 1

As of 2010-11-06, Sbj 363 violates the constraint Gsquare(4) >= Gsquare(1). There can be an unknown number of undetected errors, which we didn't detect because they didn't get so large as to reverse the inequalities.

As a temporary fix, we're just going to replace the anomalously large G-squares with those for the next model up for the same subject.

%Gsquared(:,3) = min(Gsquared(:,3),Gsquared(:,2)-.0001) ; % enforce M3 < M2 %Gsquared(:,4) = min(Gsquared(:,4),Gsquared(:,3)-.0001) ; % enforce M4 < M3 Gsquared(:,1) = min(Gsquared(:,1),Gsquared(:,4)-.0001) ; % enforce M1 < M4 % Now Model 1 should have the lowest Gsquare for each subject: assert(all(argmin(Gsquared(:,1:4)')'==1)) ; % % Print the corrected values % fprintf(' Model1 Model2 Model3 Model4 CORRECTED G-squared \n') ; % fprintf('%7.2f %7.2f %7.2f %7.2f for subject %3d \n',Gsquared') ; describe(Gsquared(:,1:4),{'Model 1', 'Model 2', 'Model 3', 'Model 4'}) % Sanity check -- this time everything should be either 0 or 27 fprintf('\n\nBecause the models are nested, the G^2 should decrease \n') ; fprintf('monotonically as the number of parameter increases. That is:\n'); fprintf('Model 1 < Model 4 < Model 3 < Model 2 \n\n') ; for k1 = 1:4 for k2 = (k1+1):4 fprintf('Model %d has lower G^2 than Model %d for %2d of the 27 Ss\n',... k1, k2, sum(Gsquared(:,k1)<Gsquared(:,k2))) ; end end clear k1 k2

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

256.512 63.926 171.52 209.52 237.42 285.92 447.97 Model 1

1183.901 563.982 497.30 682.88 1131.36 1459.78 2660.74 Model 2

563.595 255.253 303.45 411.32 498.83 604.12 1300.34 Model 3

418.747 165.674 218.96 322.25 362.89 456.20 829.73 Model 4

------------------------------------------------------------

605.689 262.209 297.81 406.49 557.63 701.51 1309.69

Because the models are nested, the G^2 should decrease

monotonically as the number of parameter increases. That is:

Model 1 < Model 4 < Model 3 < Model 2

Model 1 has lower G^2 than Model 2 for 27 of the 27 Ss

Model 1 has lower G^2 than Model 3 for 27 of the 27 Ss

Model 1 has lower G^2 than Model 4 for 27 of the 27 Ss

Model 2 has lower G^2 than Model 3 for 0 of the 27 Ss

Model 2 has lower G^2 than Model 4 for 0 of the 27 Ss

Model 3 has lower G^2 than Model 4 for 0 of the 27 Ss

Bayesian Information Criterion (BIC)

Added by Alex, 2010-10-27 See Roger & Smith (2004, Psych Review, vol 111, equations on p. 342-343)

% Fix the N term in these formulas: N = number of obesrvations per condition. % In our case N=240 because there are 480 trials per "period" (= 4 blocks) % and they are divided into 240 easy and 240 difficult trials. The % distinction between left and right stimuli is ignored in the calculation % of quantiles. Also, the "rogue" period 9 is assumed (wrongly) to have % the same N as the other 10 periods. This should not affect the outcome % because it's just 1 period out of 11, the log-likelihood part is % calculated correctly, and the model-complexity part takes log(N). N_observations_per_condition = trials_discrim_block * 4 / 2 % 240 log_N_observ = log(N_observations_per_condition) % 5.48 = log(240) BIC = NaN(size(Gsquared)) ; BIC(:,5) = Gsquared(:,5) ; % subject number % Number of parameters for each of the 4 models: % MODEL 1: Saturated model -- 7 parameters for each of the 11 blocks: % The 7 params are: v1, v2, Ter, st, sz, a, eta N_params.model1 = 7*N_periods_total ; BIC(:,1) = Gsquared(:,1) + log_N_observ*N_params.model1 ; % MODEL 2: This is the most constrained model, with the fewest params. % In MODEL_2, only drift is allowed to vary across periods. The same % boundary separation a and the same Ter and st apply across the board % for each individual subject. % This makes for 5 + 2*11 = 27 parameters N_params.model2 = 5 + 2*N_periods_total ; % 2=[v1 v2] BIC(:,2) = Gsquared(:,2) + log_N_observ*N_params.model2 ; % MODEL 3: Both drift and Ter are allowed to vary across periods. % The same boundary separation a and the same st apply across the board % for each individual subject. % This makes for 4 + 3*11 = 37 parameters N_params.model3 = 4 + 3*N_periods_total ; % 3=[v1 v2 Ter] BIC(:,3) = Gsquared(:,3) + log_N_observ*N_params.model3 ; % MODEL_4: Three things (4 parameters total because there is easy and % hard drift) are allowed to vary across periods. These are: % - drift rate (easy and hard) % - mean nondecision time Ter % - range of the nondecision time distribution st % The same boundary separation a applies across the board for each % individual subject. Also the same eta and sz. % This makes for 3 + 4*11 = 47 parameters N_params.model4 = 3 + 4*N_periods_total % 4=[v1 v2 Ter st] BIC(:,4) = Gsquared(:,4) + log_N_observ*N_params.model4 ; % The "winner" model for each subject is the one with the lowest BIC: BIC_winner = argmin(BIC(:,1:4)')' ; fprintf('**** Approximate BIC *****\n') ; fprintf(' Model1 Model2 Model3 Model4 =Sbj= Win\n') ; fprintf('%7.2f %7.2f %7.2f %7.2f =%3d= %d \n',[BIC BIC_winner]') ; fprintf(' Model1 Model2 Model3 Model4 =Sbj= Win\n') ; describe(BIC(:,1:4),{'Model 1', 'Model 2', 'Model 3', 'Model 4'}) xtab1(BIC_winner)

N_observations_per_condition =

240

log_N_observ =

5.4806

N_params =

model1: 77

model2: 27

model3: 37

model4: 47

**** Approximate BIC *****

Model1 Model2 Model3 Model4 =Sbj= Win

689.53 1399.92 568.30 591.40 =353= 3

627.76 822.47 642.76 620.48 =354= 4

656.59 856.00 595.78 575.50 =355= 4

636.66 735.91 559.80 540.84 =356= 4

703.94 900.85 712.97 590.33 =357= 4

633.18 753.04 632.64 566.99 =359= 4

869.98 2624.20 1503.13 1081.85 =360= 1

666.89 1220.63 740.98 589.00 =362= 4

709.26 731.33 578.29 544.84 =363= 4

660.81 1701.91 855.89 786.53 =364= 1

619.50 700.65 589.40 520.30 =366= 4

657.54 2022.93 811.28 725.81 =367= 1

692.78 1279.34 821.61 789.03 =382= 1

630.97 1019.57 709.45 655.85 =383= 1

599.71 1480.96 793.76 658.81 =384= 1

725.11 1088.99 739.21 677.72 =385= 4

628.73 783.36 618.21 590.65 =386= 4

777.32 2808.72 700.16 613.74 =387= 4

659.43 1317.26 612.73 615.41 =388= 3

636.96 1609.67 668.15 662.85 =389= 1

762.80 1495.12 857.22 776.71 =390= 1

780.88 1584.57 1449.87 1083.37 =391= 1

733.63 1602.05 1309.50 1087.32 =392= 1

593.53 1173.44 777.44 631.49 =393= 1

627.46 645.28 506.23 476.55 =394= 4

648.84 1854.90 701.62 576.78 =395= 4

690.29 1747.70 635.86 630.96 =396= 4

Model1 Model2 Model3 Model4 =Sbj= Win

Mean Std.dev Min Q25 Median Q75 Max

------------------------------------------------------------

678.521 63.926 593.53 631.52 659.43 707.93 869.98 Model 1

1331.879 563.982 645.28 830.85 1279.34 1607.76 2808.72 Model 2

766.379 255.253 506.23 614.10 701.62 806.90 1503.13 Model 3

676.337 165.674 476.55 579.84 620.48 713.79 1087.32 Model 4

------------------------------------------------------------

863.279 262.209 555.40 664.08 815.22 959.10 1567.28

Value Count Percent Cum_cnt Cum_pct

-------------------------------------------

1 11 40.74 11 40.74

3 2 7.41 13 48.15

4 14 51.85 27 100.00

-------------------------------------------

BIC competition outcomes counted across the 27 subjects

Added by Alex, 2010-10-27 For BIC, lower is better.

for k1 = 1:4 for k2 = (k1+1):4 fprintf('Model %d has lower BIC than Model %d for %2d of the 27 Ss\n',... k1, k2, sum(BIC(:,k1)<BIC(:,k2))) ; end end clear k1 k2

Model 1 has lower BIC than Model 2 for 27 of the 27 Ss Model 1 has lower BIC than Model 3 for 16 of the 27 Ss Model 1 has lower BIC than Model 4 for 11 of the 27 Ss Model 2 has lower BIC than Model 3 for 0 of the 27 Ss Model 2 has lower BIC than Model 4 for 0 of the 27 Ss Model 3 has lower BIC than Model 4 for 2 of the 27 Ss

Specificity Indices (for all sbjs averaged together)

plus confidence interval calculations This section is old. Was updated to 11 periods 2010-10-20.

% Grab all sbjs' dprime data by block adpr = [H.all_dprime] ; % [40, N_subjects] edpr = [H.easy_dprime] ; hdpr = [H.hard_dprime] ; adpr_mean = mean(adpr,2) ; edpr_mean = mean(edpr,2) ; hdpr_mean = mean(hdpr,2) ; % Aggregate blocks into 11 "periods": [40, N_sbj] --> [11, N_sbj] dp11 = [mean(adpr(1:4,:),1);... % Session 1 (1/2) mean(adpr(5:8,:),1);... % Session 1 (2/2) mean(adpr(9:12,:),1);... % Session 2 (1/2) ... mean(adpr(13:16,:),1);... % Session 2 (2/2) mean(adpr(17:20,:),1);... % Session 3 (1/2) ... mean(adpr(21:24,:),1);... % Session 3 (2/2) mean(adpr(25:28,:),1);... % Session 4 (1/2) ... mean(adpr(29:32,:),1);... % Session 4 (2/2) mean(adpr(33,:),1);... % Session 5 *block 1* (1/3) mean(adpr(34:37,:),1);... % Session 5 (2/3) mean(adpr(38:40,:),1)]; % Session 5 (3/3) edp11 = [mean(edpr(1:4,:),1);... % Session 1 (1/2) mean(edpr(5:8,:),1);... % Session 1 (2/2) mean(edpr(9:12,:),1);... % Session 2 (1/2) ... mean(edpr(13:16,:),1);... % Session 2 (2/2) mean(edpr(17:20,:),1);... % Session 3 (1/2) ... mean(edpr(21:24,:),1);... % Session 3 (2/2) mean(edpr(25:28,:),1);... % Session 4 (1/2) ... mean(edpr(29:32,:),1);... % Session 4 (2/2) mean(edpr(33,:),1);... % Session 5 *block 1* (1/3) mean(edpr(34:37,:),1);... % Session 5 (2/3) mean(edpr(38:40,:),1)]; % Session 5 (3/3) hdp11 = [mean(hdpr(1:4,:),1);... % Session 1 (1/2) mean(hdpr(5:8,:),1);... % Session 1 (2/2) mean(hdpr(9:12,:),1);... % Session 2 (1/2) ... mean(hdpr(13:16,:),1);... % Session 2 (2/2) mean(hdpr(17:20,:),1);... % Session 3 (1/2) ... mean(hdpr(21:24,:),1);... % Session 3 (2/2) mean(hdpr(25:28,:),1);... % Session 4 (1/2) ... mean(hdpr(29:32,:),1);... % Session 4 (2/2) mean(hdpr(33,:),1);... % Session 5 *block 1* (1/3) mean(hdpr(34:37,:),1);... % Session 5 (2/3) mean(hdpr(38:40,:),1)]; % Session 5 (3/3) % Avg RT across all subjects aRT_all = mean(sRT_all,1) ; aRT_corr = mean(sRT_corr,1) ; aRTsd_all = mean(RTsd_all,1) ; aRTsd_corr = mean(RTsd_corr,1) ;

dprime confidence interval calculations

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% % This section is old. Was updated to 11 periods 2010-10-20. % Subtract individual subjects' means to estimate within-sbj variance % All dprime madpr_by_sbj = mean(dp11); % [1 x N_subjects] adpr_centered = dp11 - repmat(madpr_by_sbj,N_periods_total,1) ; semadpr = std(adpr_centered,0,2); % Easy dprime medpr_by_sbj = mean(edp11); % [1 x N_subjects] edpr_centered = edp11 - repmat(medpr_by_sbj,N_periods_total,1) ; semedpr = std(edpr_centered,0,2); % Hard dprime mhdpr_by_sbj = mean(hdp11); % [1 x N_subjects] hdpr_centered = hdp11 - repmat(mhdpr_by_sbj,N_periods_total,1) ; semhdpr = std(hdpr_centered,0,2); % within-sbj confidence intervals alpha = .90 ; % confidence level z_crit = norminv((1+alpha)/2) ; % 1.64 for alpha=90% CI_madpr = z_crit.*(semadpr./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_medpr = z_crit.*(semedpr./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_mhdpr = z_crit.*(semhdpr./repmat(sqrt(N_sbj),N_periods_total,1)) ;

RT confidence interval calculations

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% % This section is old. Was updated to 11 periods 2010-10-20. % All RTs RT_all_centered = sRT_all - repmat(aRT_all, 27, 1); seRT_all = std(RT_all_centered,0,1) ; % Correct RTs RT_corr_centered = sRT_corr - repmat(aRT_corr, 27, 1); seRT_corr = std(RT_corr_centered,0,1) ; % All RT std RTsd_all_centered = RTsd_all - repmat(aRTsd_all, 27, 1); seRTsd_all = std(RTsd_all_centered,0,1) ; % Correct RT std RTsd_corr_centered = RTsd_corr - repmat(aRTsd_corr, 27, 1); seRTsd_corr = std(RTsd_corr_centered,0,1) ; % within-sbj confidence intervals CI_RT_all = z_crit.*(seRT_all./repmat(sqrt(N_sbj),1,N_periods_total)) ; CI_RT_corr = z_crit.*(seRT_corr./repmat(sqrt(N_sbj),1,N_periods_total)) ; CI_RTsd_all = z_crit.*(seRTsd_all./repmat(sqrt(N_sbj),1,N_periods_total)) ; CI_RTsd_corr = z_crit.*(seRTsd_corr./repmat(sqrt(N_sbj),1,N_periods_total)) ;

diffusion confidence interval calculations

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% % This section is old. Was updated to 11 periods 2010-10-20. % This is about MODEL_1 -- the saturated model. The other diffusion models % do not have enough parameters to calculate confidence intervals. % MODELs 2 through 4 get their confidence intervals through bootstrapping. % The current section was written before there was a bootstrap. % Subtract individual subjects' means to estimate within-sbj variance % a ma_by_sbj = mean(sbj_means(:,a),2)' ; % [N_periods_total x N_subjects] a_centered = sbj_means(:,a)' - repmat(ma_by_sbj, N_periods_total, 1) ; sea = std(a_centered,0,2) ; % ter mter_by_sbj = mean(sbj_means(:,ter),2)' ; % [N_periods_total x N_subjects] ter_centered = sbj_means(:,ter)' - repmat(mter_by_sbj, N_periods_total, 1) ; seter = std(ter_centered,0,2) ; % eta meta_by_sbj = mean(sbj_means(:,eta),2)' ; % [N_periods_total x N_subjects] eta_centered = sbj_means(:,eta)' - repmat(meta_by_sbj, N_periods_total, 1) ; seeta = std(eta_centered,0,2) ; % sz msz_by_sbj = mean(sbj_means(:,sz),2)' ; % [N_periods_total x N_subjects] sz_centered = sbj_means(:,sz)' - repmat(msz_by_sbj, N_periods_total, 1) ; sesz = std(sz_centered,0,2) ; % st mst_by_sbj = mean(sbj_means(:,st),2)' ; % [N_periods_total x N_subjects] st_centered = sbj_means(:,st)' - repmat(mst_by_sbj, N_periods_total, 1) ; sest = std(st_centered,0,2) ; % v1 mv1_by_sbj = mean(sbj_means(:,v1),2)' ; % [N_periods_total x N_subjects] v1_centered = sbj_means(:,v1)' - repmat(mv1_by_sbj, N_periods_total, 1) ; sev1 = std(v1_centered,0,2) ; % v2 mv2_by_sbj = mean(sbj_means(:,v2),2)' ; % [N_periods_total x N_subjects] v2_centered = sbj_means(:,v2)' - repmat(mv2_by_sbj, N_periods_total, 1) ; sev2 = std(v2_centered,0,2) ; % Tmin = t1 = Ter-st/2 [Added by Alex 2010-11-17] Tmin = sbj_means(:,ter)' - sbj_means(:,st)'./2 ; % [N_periods_total x N_subjects] mTmin_by_sbj = mean(Tmin) ; Tmin_centered = Tmin - repmat(mTmin_by_sbj, N_periods_total, 1) ; seTmin = std(Tmin_centered,0,2) ; % within-sbj confidence intervals CI_a = z_crit.*(sea./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_ter = z_crit.*(seter./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_eta = z_crit.*(seeta./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_sz = z_crit.*(sesz./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_st = z_crit.*(sest./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_v1 = z_crit.*(sev1./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_v2 = z_crit.*(sev2./repmat(sqrt(N_sbj),N_periods_total,1)) ; CI_Tmin = z_crit.*(seTmin./repmat(sqrt(N_sbj),N_periods_total,1)) ; % Average over subject dprimes (for SI calculations and plotting purposes) mdp11 = mean(dp11,2) ; medp11 = mean(edp11,2) ; mhdp11 = mean(hdp11,2) ; % RT Specificity Index (SI) % SI_RT_all = (aRT_all(5) - aRT_all(7)) / ... % (aRT_all(5) - aRT_all(1)); % SI_RT_corr = (aRT_corr(5) - aRT_corr(7)) / ... % (aRT_corr(5) - aRT_corr(1)); SI_RT_all = specif_idx(aRT_all); SI_RT_corr = specif_idx(aRT_corr); % RT std Specificity Index (SI) SI_RTsd_all = specif_idx(aRTsd_all); SI_RTsd_corr = specif_idx(aRTsd_corr); % Dprime Specificity Index (SI) SI_dpr = specif_idx(mdp11'); % Easy Dprime Specificity Index (SI) SI_edpr = specif_idx(medp11'); % Hard Dprime Specificity Index (SI) SI_hdpr = specif_idx(mhdp11'); % Parameter a Specificity Index SI_a = specif_idx(mean(sbj_means(:, a))); % Parameter ter Specificity Index SI_ter = specif_idx(mean(sbj_means(:, ter))); % Parameter eta Specificity Index SI_eta = specif_idx(mean(sbj_means(:, eta))); % Parameter sz Specificity Index SI_sz = specif_idx(mean(sbj_means(:, sz))); % Parameter st Specificity Index SI_st = specif_idx(mean(sbj_means(:, st))); % Drift rate 1 Specificity Index SI_v1 = specif_idx(mean(sbj_means(:, v1))); % Drift rate 2 Specificity Index SI_v2 = specif_idx(mean(sbj_means(:, v2)));

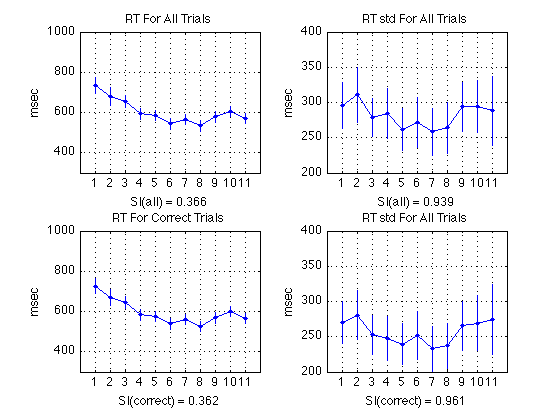

Plot RT and RT std (sbjs averaged together)

% All RTs subplot(2,2,1) ; plot(1:N_periods_total, aRT_all, 'b.-') ; axis([0 N_periods_total+1 300 1000]) ; grid on ; set(gca,'xtick',([1:N_periods_total])); title('RT For All Trials') ; ylabel('msec') ; xlabel(sprintf('SI(all) = %0.3f', SI_RT_all)); errorbar1(1:N_periods_total,aRT_all,CI_RT_all,['b' 'n']) ; % Correct RTs subplot(2,2,3) ; plot(1:N_periods_total, aRT_corr, 'b.-') ; axis([0 N_periods_total+1 300 1000]) ; grid on ; set(gca,'xtick',([1:N_periods_total])); title('RT For Correct Trials') ; ylabel('msec') ; xlabel(sprintf('SI(correct) = %0.3f', SI_RT_corr)); errorbar1(1:N_periods_total,aRT_corr,CI_RT_corr,['b' 'n']) ; % All RT std subplot(2,2,2) ; plot(1:N_periods_total, aRTsd_all, 'b.-') ; axis([0 N_periods_total+1 200 400]) ; grid on ; set(gca,'xtick',([1:N_periods_total])); title('RT std For All Trials') ; ylabel('msec') ; xlabel(sprintf('SI(all) = %0.3f', SI_RTsd_all)); errorbar1(1:N_periods_total,aRTsd_all,CI_RTsd_all,['b' 'n']) ; % Correct RT std subplot(2,2,4) ; plot(1:N_periods_total, aRTsd_corr, 'b.-') ; axis([0 N_periods_total+1 200 400]) ; grid on ; set(gca,'xtick',([1:N_periods_total])); title('RT std For All Trials') ; ylabel('msec') ; xlabel(sprintf('SI(correct) = %0.3f', SI_RTsd_corr)); errorbar1(1:N_periods_total,aRTsd_corr,CI_RTsd_corr,['b' 'n']) ;

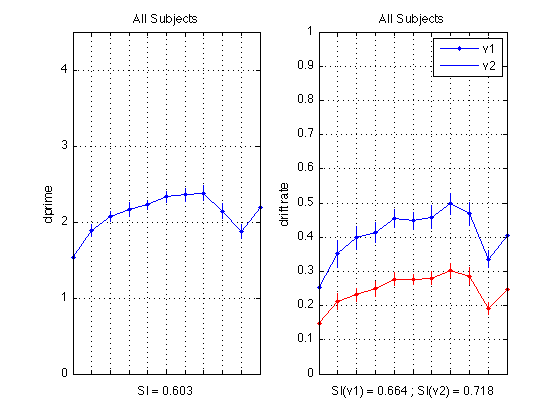

Plot dprime

CI = CI_madpr ; subplot(1,2,1) ; plot(1:N_periods_total, mdp11,'b.-') ; axis([1 N_periods_total 0 M]) ; grid on ; set(gca,'xtick',([1:N_periods_total]),... 'xticklabel',[],'ytick',(0:ceil(M))) ; title('All Subjects') ; ylabel('dprime'); xlabel(sprintf('SI = %0.3f', SI_dpr)); errorbar1(1:N_periods_total,mdp11,CI,['b' 'n']) ; % Plot drift rates subplot(1,2,2) ; plot(1:N_periods_total, mean(sbj_means(:,v1)), 'b.-'); CI = CI_v1 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v1)), CI, ['b' 'n']); hold on plot(1:N_periods_total, mean(sbj_means(:,v2)), 'r.-'); CI = CI_v2 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v2)), CI, ['r' 'n']); axis([1 N_periods_total 0 1]) ; grid on ; set(gca,'xtick',([1:N_periods_total]),... 'xticklabel',[],'ytick',(0:.1:1)) ; title('All Subjects') ; ylabel('drift rate'); xlabel(sprintf('SI(v1) = %0.3f ; SI(v2) = %0.3f', SI_v1, SI_v2)); legend('v1','v2','location','NorthEast'); hold off

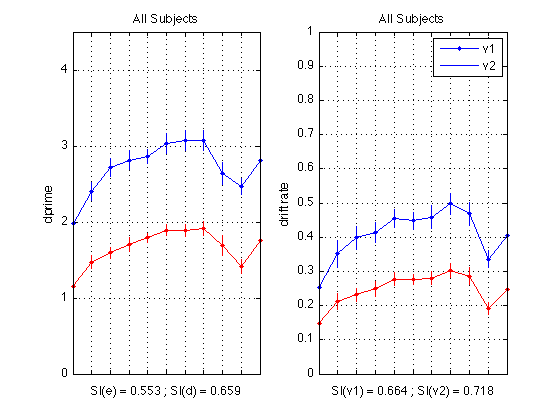

All subjects plot with separate deltas

Delta is easy=7 degrees versus difficult=4 degrees difference b/n clockwise and counterclockwise stimuli.

% Plot dprime subplot(1,2,1) ; plot(1:N_periods_total, medp11,'b.-') ; CI = CI_medpr ; errorbar1(1:N_periods_total,medp11,CI,['b' 'n']) ; hold on plot(1:N_periods_total, mhdp11,'r.-') ; CI = CI_mhdpr ; errorbar1(1:N_periods_total,mhdp11,CI,['r' 'n']) ; axis([1 N_periods_total 0 M]) ; grid on ; set(gca,'xtick',([1:N_periods_total]),... 'xticklabel',[],'ytick',(0:ceil(M))) ; title('All Subjects') ; ylabel('dprime'); xlabel(sprintf('SI(e) = %0.3f ; SI(d) = %0.3f', SI_edpr, SI_hdpr)); % Plot drift rates subplot(1,2,2) ; plot(1:N_periods_total, mean(sbj_means(:,v1)), 'b.-'); CI = CI_v1 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v1)), CI, ['b' 'n']); hold on plot(1:N_periods_total, mean(sbj_means(:,v2)), 'r.-'); CI = CI_v2 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v2)), CI, ['r' 'n']); axis([1 N_periods_total 0 1]) ; grid on ; set(gca,'xtick',([1:N_periods_total]),... 'xticklabel',[],'ytick',(0:.1:1)) ; title('All Subjects') ; ylabel('drift rate'); xlabel(sprintf('SI(v1) = %0.3f ; SI(v2) = %0.3f', SI_v1, SI_v2)); legend('v1','v2','location','NorthEast'); hold off

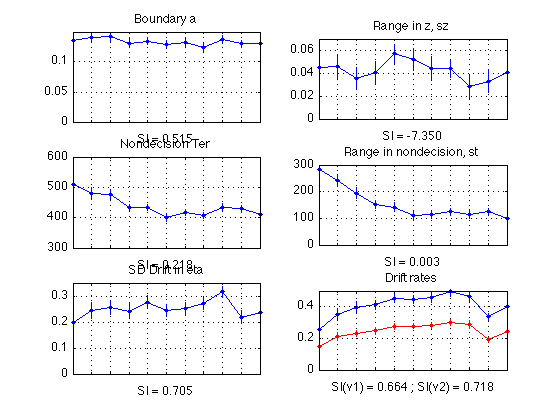

Plot diffusion params (averaged across all sbjs)

MODEL_1, non-bootstrapped, updated for 11 periods, as of 2010-10-21

subplot(3,2,1) ; % a plot(1:N_periods_total, mean(sbj_means(:, a)), 'b.-'); CI = CI_a ; errorbar1(1:N_periods_total, mean(sbj_means(:,a)), CI, ['b' 'n']); title('Boundary a'); xlabel(sprintf('SI = %0.3f', mean(SI_a))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); axis([1 N_periods_total 0 0.15]) ; grid on ; subplot(3,2,3) ; % ter plot(1:N_periods_total, mean(sbj_means(:, ter)*1000), 'b.-'); CI = CI_ter ; errorbar1(1:N_periods_total, mean(sbj_means(:,ter)*1000), CI*1000, ['b' 'n']); title('Nondecision Ter'); xlabel(sprintf('SI = %0.3f', mean(SI_ter))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); axis([1 N_periods_total 300 600]) ; grid on ; subplot(3,2,5) ; % eta plot(1:N_periods_total, mean(sbj_means(:, eta)), 'b.-'); CI = CI_eta ; errorbar1(1:N_periods_total, mean(sbj_means(:,eta)), CI, ['b' 'n']); title('SD Drift in eta'); xlabel(sprintf('SI = %0.3f', mean(SI_eta))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); axis([1 N_periods_total 0 0.35]) ; grid on ; subplot(3,2,2) ; % sz plot(1:N_periods_total, mean(sbj_means(:, sz)), 'b.-'); CI = CI_sz ; errorbar1(1:N_periods_total, mean(sbj_means(:,sz)), CI, ['b' 'n']); title('Range in z, sz'); xlabel(sprintf('SI = %0.3f', mean(SI_sz))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); axis([1 N_periods_total 0 0.07]) ; grid on ; subplot(3,2,4) ; % st plot(1:N_periods_total, mean(sbj_means(:, st)*1000), 'b.-'); CI = CI_st ; errorbar1(1:N_periods_total, mean(sbj_means(:,st)*1000), CI*1000, ['b' 'n']); title('Range in nondecision, st'); xlabel(sprintf('SI = %0.3f', mean(SI_st))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); axis([1 N_periods_total 0 300]) ; grid on ; % v1 subplot(3,2,6) ; plot(1:N_periods_total, mean(sbj_means(:, v1)), 'b.-'); CI = CI_v1 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v1)), CI, ['b' 'n']); title('Drift rates'); xlabel(sprintf('SI(v1) = %0.3f ; SI(v2) = %0.3f', mean(SI_v1),mean(SI_v2))); set(gca,'xtick',([1:N_periods_total]),'xticklabel',[]); hold on % v2 plot(1:N_periods_total, mean(sbj_means(:, v2)), 'r.-'); CI = CI_v2 ; errorbar1(1:N_periods_total, mean(sbj_means(:,v2)), CI, ['r' 'n']); axis([1 N_periods_total 0 0.5]) ; grid on ; hold off

Export data for the six-plot empirical figure, 2010-11-17

These data were ported to R and used to generate the main data figure for the revised manuscript sent to PB&R in November 2010. They are the group-averaged parameters for the saturated model (MODEL_1) across the 11 time periods.

% Panel A fprintf('\n\nEasy dprime: Group mean: medp11 = mean(edp11,2)\n') ; fprintf(' %6.4f',medp11) ; fprintf('\n CI90 w/in sbj: CI_medpr\n') ; fprintf(' %6.4f',CI_medpr) ; fprintf('\n\nHard dprime: Group mean: medp11 = mean(hdp11,2)\n') ; fprintf(' %6.4f',mhdp11) ; fprintf('\n CI90 w/in sbj: CI_mhdpr\n') ; fprintf(' %6.4f',CI_mhdpr) ; fprintf('\n\nAverage dprime (not plotted): Group mean: mdp11 = mean(dp11,2)\n') ; fprintf(' %6.4f',mdp11) ; fprintf('\n CI90 w/in sbj: CI_madpr\n') ; fprintf(' %6.4f',CI_madpr) ; % Panel B fprintf('\n\nAverage mean RT (all trials): Group mean: aRT_all = mean(sRT_all,1)\n') ; fprintf(' %6.1f',aRT_all) ; fprintf('\n CI90 w/in sbj: CI_RT_all\n') ; fprintf(' %6.2f',CI_RT_all) ; % Panel C fprintf('\n\nEasy drift rate v1: mean(sbj_means(:, v1))\n') ; fprintf(' %6.4f',mean(sbj_means(:, v1))) ; fprintf('\n CI90 w/in sbj: CI_v1\n') ; fprintf(' %6.4f',CI_v1) ; fprintf('\n\nHard drift rate v2: mean(sbj_means(:, v2))\n') ; fprintf(' %6.4f',mean(sbj_means(:, v2))) ; fprintf('\n CI90 w/in sbj: CI_v2\n') ; fprintf(' %6.4f',CI_v2) ; % Panel D fprintf('\n\nMean nondecision time Ter: mean(sbj_means(:, ter)*1000)\n') ; fprintf(' %6.1f',mean(sbj_means(:, ter)*1000)) ; fprintf('\n CI90 w/in sbj: CI_ter*1000\n') ; fprintf(' %6.2f',CI_ter*1000) ; fprintf('\n\nRange in nondecision time st (not plotted): mean(sbj_means(:, st)*1000)\n') ; fprintf(' %6.1f',mean(sbj_means(:, st)*1000)) ; fprintf('\n CI90 w/in sbj: CI_st*1000\n') ; fprintf(' %6.2f',CI_st*1000) ; fprintf('\n\nMinimum nondecision time Tmin=t1: mean(Tmin,2)*1000\n') ; fprintf(' %6.1f',mean(Tmin,2)*1000) ; fprintf('\n CI90 w/in sbj: CI_Tmin*1000\n') ; fprintf(' %6.2f',CI_Tmin*1000) ; % Panel E fprintf('\n\nVariability in drift rate eta: mean(sbj_means(:, eta))\n') ; fprintf(' %6.4f',mean(sbj_means(:, eta))) ; fprintf('\n CI90 w/in sbj: CI_eta\n') ; fprintf(' %6.4f',CI_eta) ; % Panel F fprintf('\n\nBoundary separation a: mean(sbj_means(:, a))\n') ; fprintf(' %6.4f',mean(sbj_means(:, a))) ; fprintf('\n CI90 w/in sbj: CI_a\n') ; fprintf(' %6.4f',CI_a) ; % Not plotted fprintf('\n\nRange in starting point sz: mean(sbj_means(:, sz))\n') ; fprintf(' %6.4f',mean(sbj_means(:, sz))) ; fprintf('\n CI90 w/in sbj: CI_sz\n') ; fprintf(' %6.4f',CI_sz) ; fprintf('\n\nMAESpec_diffusion_fits executed on %s.\n\n',datestr(now)) ;

Easy dprime: Group mean: medp11 = mean(edp11,2) 1.9742 2.4024 2.7152 2.8128 2.8620 3.0312 3.0682 3.0706 2.6388 2.4638 2.8112 CI90 w/in sbj: CI_medpr 0.0946 0.1233 0.1219 0.1256 0.0949 0.1272 0.1314 0.1287 0.1476 0.1076 0.1409 Hard dprime: Group mean: medp11 = mean(hdp11,2) 1.1553 1.4729 1.5972 1.7069 1.7923 1.8890 1.8887 1.9091 1.6944 1.4125 1.7599 CI90 w/in sbj: CI_mhdpr 0.0773 0.0821 0.0729 0.0946 0.0602 0.0731 0.0846 0.0912 0.1305 0.0957 0.0868 Average dprime (not plotted): Group mean: mdp11 = mean(dp11,2) 1.5379 1.8925 2.0733 2.1644 2.2251 2.3292 2.3588 2.3805 2.1424 1.8721 2.1925 CI90 w/in sbj: CI_madpr 0.0746 0.0791 0.0674 0.0969 0.0577 0.0688 0.0854 0.0966 0.1029 0.0914 0.0901 Average mean RT (all trials): Group mean: aRT_all = mean(sRT_all,1) 734.2 679.7 655.1 594.0 583.7 543.3 566.7 533.5 579.3 607.0 571.9 CI90 w/in sbj: CI_RT_all 39.64 42.95 29.79 28.62 24.68 25.85 25.90 26.11 26.81 25.60 24.72 Easy drift rate v1: mean(sbj_means(:, v1)) 0.2541 0.3517 0.3980 0.4133 0.4546 0.4477 0.4588 0.4973 0.4682 0.3358 0.4050 CI90 w/in sbj: CI_v1 0.0280 0.0391 0.0337 0.0297 0.0267 0.0252 0.0336 0.0305 0.0332 0.0244 0.0312 Hard drift rate v2: mean(sbj_means(:, v2)) 0.1477 0.2118 0.2311 0.2497 0.2776 0.2769 0.2798 0.3023 0.2855 0.1913 0.2461 CI90 w/in sbj: CI_v2 0.0184 0.0243 0.0199 0.0221 0.0192 0.0145 0.0188 0.0204 0.0251 0.0166 0.0191 Mean nondecision time Ter: mean(sbj_means(:, ter)*1000) 510.0 479.9 477.6 433.8 433.4 400.6 416.4 406.0 433.3 428.6 409.0 CI90 w/in sbj: CI_ter*1000 26.19 21.09 16.37 9.19 8.98 12.81 10.30 9.44 12.85 10.84 13.18 Range in nondecision time st (not plotted): mean(sbj_means(:, st)*1000) 282.7 241.6 194.6 151.1 141.6 110.7 115.8 124.9 115.6 125.4 99.6 CI90 w/in sbj: CI_st*1000 28.45 22.91 21.61 15.39 17.78 13.87 12.67 13.26 16.76 11.55 16.41 Minimum nondecision time Tmin=t1: mean(Tmin,2)*1000 368.6 359.1 380.3 358.2 362.6 345.3 358.5 343.5 375.5 365.9 359.2 CI90 w/in sbj: CI_Tmin*1000 35.95 19.85 17.48 8.03 8.92 12.27 10.11 9.68 16.22 10.18 13.63 Variability in drift rate eta: mean(sbj_means(:, eta)) 0.1974 0.2446 0.2556 0.2412 0.2765 0.2433 0.2500 0.2707 0.3159 0.2191 0.2370 CI90 w/in sbj: CI_eta 0.0314 0.0298 0.0265 0.0295 0.0251 0.0240 0.0254 0.0226 0.0214 0.0268 0.0303 Boundary separation a: mean(sbj_means(:, a)) 0.1361 0.1415 0.1418 0.1316 0.1346 0.1292 0.1331 0.1238 0.1380 0.1301 0.1311 CI90 w/in sbj: CI_a 0.0099 0.0084 0.0068 0.0069 0.0056 0.0067 0.0069 0.0064 0.0065 0.0053 0.0062 Range in starting point sz: mean(sbj_means(:, sz)) 0.0454 0.0458 0.0353 0.0407 0.0570 0.0521 0.0440 0.0440 0.0284 0.0331 0.0411 CI90 w/in sbj: CI_sz 0.0105 0.0106 0.0097 0.0094 0.0084 0.0092 0.0106 0.0074 0.0104 0.0102 0.0091 MAESpec_diffusion_fits executed on 29-Nov-2010 17:38:51.

Raw specificity values

'Raw' in the sense that no bootstrapping is involved. Each row printed below represents a subject. Columns are: 1 = dprime 2 = a 3 = Ter 4 = eta 5 = sz 6 = st 7 = v1 8 = v2

% The following indices are calculated % As of Oct 2010, this code has not been changed since Aug 2010: % % SI: Indices with session 4 used as the final day of training and the % first half of session 5 used as the first day of transfer (minus % the first block) % SIb: Indices with session 5, block 1 used as the final day of training % and the first half of session 5 used as the first day of transfer % (minus the first block) % SIc: Indices with session 4, used as the final day of training and the % first block of session 5 used as the first day of "transfer" % (although this is not the transfer direction, but a continuation of % the training direction occurring after a session of MAE trials) SI %#ok<*NOPTS> SIb SIc

SI =

-0.2607 1.3000 0.0802 1.8889 0.5181 -0.4750 0.6696 0.5161

0.6547 0.9167 0.1774 1.6522 -0.2778 0.2548 1.1331 0.9415

0.7298 -0.9167 0 1.2553 -0.1034 -0.1894 0.4228 1.2258

0.2035 0.7711 1.7727 0.9346 Inf -0.1444 0.8756 0.8333

0.4970 -0.6444 0.1138 0 0.9615 0.0767 0.4468 0.3745

0.8770 -1.2222 0.0857 2.2973 -1.2000 -0.1172 0.9319 1.1188

0.6892 0.0328 1.6500 0.6055 0 0.1038 0.6734 0.7415

-0.1177 -0.1364 0.1327 0 0 -0.0844 -0.0605 -0.0778

0.9452 -3.8000 0.9577 1.0065 -Inf 0.8957 2.0185 0.6988

0.4311 0.2297 0.1311 NaN -0.1452 0.1517 0.1897 0.4954

0.6626 -0.2500 -0.1923 Inf 1.0000 0.2038 1.0489 0.8302

1.6666 0.6714 0.2077 0.4136 -0.7778 9.5000 0.8798 1.0328

0.5775 0.3750 0.4225 4.0714 0.5098 -0.0171 0.2868 0.5665

-0.2710 0.7838 2.8000 0.5263 0.7619 -0.0776 0.2710 0.4237

1.3976 0.1667 -0.0441 0.4884 0.1800 0.0144 0.7870 0.8058

0.1808 0.3731 0.2256 0.2727 0 -0.0636 0.5357 0.3448

0.8652 0 0.1839 -0.1895 -0.0388 -0.1286 0.6509 0.6864

1.2435 0.4694 -0.0945 Inf 0.6500 0.0916 1.0652 1.0762

0.2290 0.2000 0.0412 -1.6885 1.0000 0.1333 2.4921 2.5306

-0.7018 -1.4615 0 -15.6429 4.9286 -0.7632 2.2975 3.8750

0.5956 -5.0000 0.2840 28.6667 -4.0000 0.3663 1.1471 1.2000

0.0224 0.1892 0.0940 0.3533 -1.5172 -0.3412 0.4365 0.3806

1.3661 -1.2800 0.7101 0 -0.5893 -0.0247 0.2194 0.6243

0.6933 -0.1389 0.8462 0.5948 0 0.2233 0.8877 0.8919

0.5132 1.5455 0.1111 -0.1825 -1.6667 0.1024 0.3266 0.4081

0.5948 0.5536 0.0264 1.0556 -2.7222 -0.2554 -1.4634 0.3514

2.3037 -1.9444 0.1211 -1.0063 8.8750 0.1453 -0.3471 -1.1000

SIb =

-0.5717 0.9062 -0.1814 1.1622 0.6226 -1.0345 0.8348 0.7345

0.8224 1.0159 -0.0200 1.5310 -0.0147 -0.0473 1.1277 0.9588

0.6772 -0.3529 -0.1429 -3.0000 -Inf -0.4439 0.4538 -0.4000

0.2808 0.6984 0.7792 0.9296 2.9143 0.2961 0.9051 0.7222

-0.9064 0.0263 0.0763 0 -Inf 0.2136 -0.1094 -0.2836

0.7626 -0.2903 0.4921 2.2973 0.7027 -0.2361 0.8932 1.1739

0.5443 -0.9667 0.0250 0.7471 6.0000 0.0717 0.6957 0.7551

-0.1602 -2.1250 -6.7273 0 0 0.3949 -0.0273 -0.0112

0.8829 0.7000 0.9423 1.0274 -9.3333 0.7536 1.9016 1.2907

-0.5315 -0.3902 0.1264 NaN -Inf 0.0682 0.1856 -0.8644

0.5400 3.5000 -4.1667 0.8688 1.0000 0.5098 1.5625 3.2500

-6.1749 0.2813 0.2426 0.4974 -Inf -2.0357 0.8372 1.5714

0.4931 0.1818 0.0682 0.6417 0.7396 0.0556 0.2581 0.6364

-1.5157 0.4286 -1.5714 0.7300 0.9123 0.1935 0.2427 0.0377

1.4272 0.5000 -0.1639 0.4907 -0.2424 -0.2018 0.8145 0.8050

-0.1316 0.4545 0.2536 0.0345 0 0.2812 -0.0777 -0.0857

0.3192 0.2667 0.1839 0 0 0.1124 0.2377 0.2697

2.2789 -1.1667 -0.1583 1.0283 0.8923 0.0165 1.0898 1.0597

0.1014 6.0000 -1.1136 -4.4667 NaN 0.2778 2.6207 -1.0270

-0.4198 -3.5714 0.0780 -0.3314 0 -1.7917 -0.1716 -0.5753

0.5772 -0.2414 0.3980 2.2029 2.0526 0.1351 1.0820 1.1532

0.5466 -0.8182 -0.0495 0.6151 -1.4333 -0.0560 0.6606 0.6706

1.1675 -0.1176 1.5714 0 -0.7800 -0.0473 0.4158 0.7948

0.3447 -0.2424 2.5000 0.6737 0 -0.0335 0.8834 0.8660

0.2741 0.4545 0.2000 0 1.4000 0.3294 0.0163 -0.0126

0.6248 0.3902 -0.4832 0.9747 -0.1552 -0.2419 -0.0100 0.8812

1.2691 8.5714 0.0044 Inf 3.8636 -0.2645 -1.8596 1.6364

SIc =

0.1979 4.2000 0.2214 -4.4815 -0.2771 0.2750 -1.0000 -0.8226

-0.9441 6.2500 0.1935 -0.2283 -0.2593 0.2885 -0.0418 -0.4211

0.1631 -0.4167 0.1250 1.0638 1.0000 0.1762 -0.0569 1.1613

-0.1074 0.2410 4.5000 0.0719 -Inf -0.6257 -0.3111 0.4000

0.7362 -0.6889 0.0407 0 1.0000 -0.1742 0.5013 0.5127

0.4819 -0.7222 -0.8000 0 -6.4000 0.0962 0.3624 0.3168

0.3181 0.5082 1.6667 -0.5596 1.2000 0.0346 -0.0730 -0.0554

0.0367 0.6364 0.8878 0 0 -0.7922 -0.0323 -0.0659

0.5319 -15.0000 0.2676 0.7630 -Inf 0.5767 -0.1296 2.0361

0.6285 0.4459 0.0055 NaN 1.0000 0.0897 0.0051 0.7294

0.2664 1.5000 0.7692 Inf 0.0345 -0.6242 0.9130 1.0755

1.0929 0.5429 -0.0462 -0.1667 1.0000 3.8000 0.2618 0.9426

0.1665 0.2361 0.3803 9.5714 -0.8824 -0.0769 0.0388 -0.1921

0.4948 0.6216 1.7000 -0.7544 -1.7143 -0.3362 0.0374 0.4011

0.0695 -0.6667 0.1029 -0.0047 0.3400 0.1799 -0.1480 0.0041

0.2760 -0.1493 -0.0376 0.2468 0 -0.4798 0.5692 0.3966

0.8020 -0.3636 0 -0.1895 -0.0388 -0.2714 0.5421 0.5706

0.8096 0.7551 0.0551 -Inf -2.2500 0.0763 0.2739 -0.2762

0.1421 1.1600 0.5464 0.5082 1.0000 -0.2000 0.0794 1.7551

-0.1986 0.4615 -0.0847 -11.5000 4.9286 0.3684 2.1074 2.8250

0.0434 -3.8333 -0.1893 -22.0000 5.7500 0.2673 -0.7941 -0.3053

-1.1563 0.5541 0.1368 -0.6800 -0.0345 -0.2701 -0.6599 -0.8806

-1.1858 -1.0400 1.5072 0 0.1071 0.0216 -0.3361 -0.8307

0.5321 0.0833 1.1026 -0.2416 0 0.2484 0.0367 0.1931

0.3294 2.0000 -0.1111 -0.1825 7.6667 -0.3386 0.3154 0.4154

-0.0798 0.2679 0.3436 3.1944 -2.2222 -0.0109 -1.4390 -4.4595

-3.8451 1.3889 0.1172 1.0000 -1.7500 0.3240 0.5289 4.3000

Diffusion paramter specificity values (avg across subjs)

SI_a SI_ter SI_eta SI_sz SI_st SI_v1 SI_v2

SI_a =

0.5150

SI_ter =

0.2175

SI_eta =

0.7048

SI_sz =

-7.3500

SI_st =

0.0031

SI_v1 =

0.6641

SI_v2 =

0.7181

Bootstrap Specificity Indices and Confidence Intervals